September 1, 2021

What Patients Really Think of Your Shiny New Clinical Algorithm

I have a soft spot for studies of studies—peer-reviewed research that examines other peer-reviewed research in search of broad trends and themes that individual studies may miss. Such meta literature reviews are a lot like journalism in that they’re looking at tiny pieces of evidence to tell a much bigger story. Maybe that’s why I like them. It’s scientific journalism!

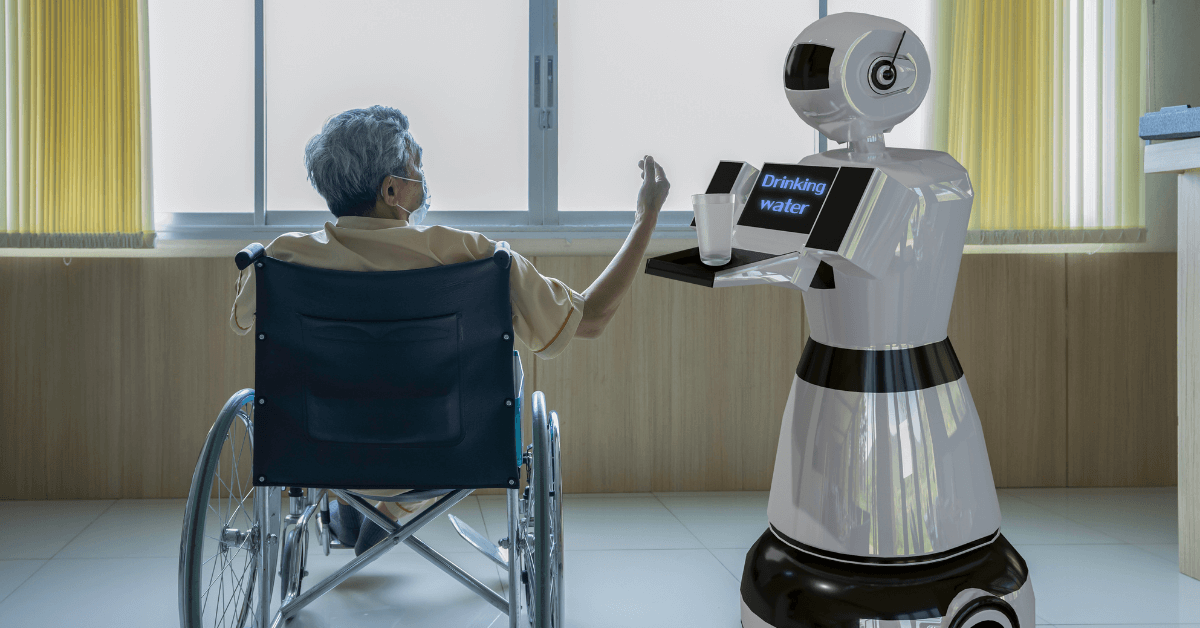

A new study of studies caught my eye the other day, and it reaches some broad conclusions about how people feel about artificial intelligence in clinical decision-making, commonly referred to as clinical AI. That’s different from administrative AI or financial AI, and you don’t need a meta review to know what people think about a computer telling them that they can’t get an appointment with a medical specialist for three months but have to pay the surprise medical bill from their ER visit in 30 days or else.

The new study appears in The Lancet, and you can download it here.

Four researchers from the University of California in San Francisco, the University of Michigan and the Veterans Affairs Medical Center in San Francisco wanted to understand the attitudes of patients and the public toward clinical AI, arguing that a lack of acceptance and understanding of AI among those groups is a potential barrier to the adoption of AI-powered technology in hospitals and physician offices.

To find out what patients and the public think about clinical AI, the researchers looked at nearly 2,600 pieces of research on the topic published from Jan. 1, 2000, through Sept. 28, 2020. In the end, only 23 of the studies met their research criteria and were part of their final meta review.

They defined clinical AI as “any software made to automate intelligent behavior in a healthcare setting for the purposes of diagnosis or treatment that might be directed towards patients, caregivers, or healthcare providers, or a combination.”

They separated the findings from the 23 studies into six themes:

- AI concept

- AI acceptability

- AI relationship with humans

- AI development and implementation

- AI strengths and benefits

- AI weaknesses and risks

Across the six themes, most of the patients and consumers in the 23 studies were cool with using AI to diagnose and treat them, but only within limits.

For example, most patients and consumers weren’t too keen on completely autonomous clinical AI, such as chatbots that can diagnose symptoms and prescribe medications based solely on algorithms. They also preferred clinical AI as a complement or support for physicians and other clinicians, not as a replacement for their providers.

Patients and consumers liked the potential of clinical AI to expand access to care, provide more accurate diagnoses and shorten time to treatment. They didn’t like clinical AI’s inability to verbally communicate with them or to pick up on other factors affecting their conditions, such as social determinants of health.

“Participants strongly preferred to keep providers in the loop, maximizing the individual strengths of healthcare providers and AI,” the researchers said.

So why is a study of studies like this important from a market perspective?

Any business in any industry that wants to succeed needs to know what its customers think. Hospitals, health systems and medical practices are businesses, and the same rule applies to them.

If hospitals, health systems and medical practices want to adopt AI-powered technologies to improve clinical outcomes—or reduce clinical headcount—they should know what their patients will and won’t accept before they press the power button.

Clinical AI is about patients, not the technology.

To learn more on this topic, please read:

- “How Can Healthcare Avoid Screwing Up AI’s Potential?”

- “What Healthcare AI Adoption and the Old Kiddieland Amusement Park Have in Common”

Thanks for reading.